In today’s business landscape, AI represents both a revolutionary opportunity and a significant challenge. As organizations integrate AI capabilities, many discover that managing AI risks requires a comprehensive approach beyond traditional security measures. Let’s explore how to build an effective AI risk management framework that protects your business while unlocking AI’s transformative potential.

The Associated Risks of Using AI

The challenges associated with AI implementation span multiple domains, creating a complex puzzle for businesses seeking to harness this powerful technology. Based on current industry trends, the most pressing concerns include:

Data Security and Privacy Vulnerabilities

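AI systems require vast amounts of data to function effectively, which creates significant security challenges. A model generates its responses rather than retrieving a fixed record, so there is no simple one-to-one mapping between a prompt and the data a response might reveal. That makes data security considerably more complex than in traditional systems.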

The reality is that without proper controls, sensitive information can be exposed through model outputs, even when that behavior wasn’t intended. A seemingly innocent series of prompts might extract confidential data if your safeguards aren’t robust enough.
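One common safeguard is to scan model output for sensitive patterns before it reaches the user. The Python sketch below illustrates the idea under simple assumptions: the pattern names, the regular expressions (including the illustrative API key format), and the `redact_output` helper are all hypothetical, and a production system would more likely rely on a dedicated data loss prevention tool tuned to its own data.

```python
import re

# Hypothetical detection rules for illustration only; real deployments
# should tune patterns (or use a DLP service) for their own data.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # 13 to 16 digits, optionally separated by spaces or hyphens.
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # Illustrative secret-key format, not any specific vendor's.
    "api_key": re.compile(r"\bsk-[A-Za-z0-9]{20,}\b"),
}


def redact_output(text: str) -> tuple[str, list[str]]:
    """Mask sensitive matches in model output and report which rules fired."""
    findings = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(text):
            findings.append(label)
            text = pattern.sub(f"[REDACTED {label.upper()}]", text)
    return text, findings
```

Calling `redact_output("Contact jane.doe@example.com")` returns the masked text `"Contact [REDACTED EMAIL]"` together with `["email"]`, so the caller can both block the leak and log which rule fired.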

AI Hallucinations and Misinformation

One of the most concerning aspects of generative AI is its tendency to produce “hallucinations” — information presented as factual that is actually inaccurate or misleading.

These fabrications can devastate your company’s public image, trigger financial losses, create technical complications, and even expose you to legal liability. Without a clear chain of logic between data sources and AI results, your business remains vulnerable.
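One way to make that chain of logic visible is to require the model to cite the source documents it drew on, then flag any answer whose citations cannot be traced back to what was actually retrieved. The sketch below assumes a hypothetical `[doc-N]` citation format and a hypothetical `check_grounding` helper. It only checks traceability: it cannot confirm that a cited document actually supports the claim, so it narrows the problem rather than solving it.

```python
import re

# Hypothetical citation format the model is instructed to use, e.g. "[doc-3]".
CITATION = re.compile(r"\[(doc-\d+)\]")


def check_grounding(answer: str, retrieved_ids: set[str]) -> dict:
    """Flag answers with no citations or with citations to unknown sources."""
    cited = CITATION.findall(answer)
    unknown = sorted(set(cited) - retrieved_ids)
    return {
        "has_citations": bool(cited),
        "unknown_citations": unknown,
        # Route to a human if nothing is cited or a citation cannot be traced.
        "needs_review": not cited or bool(unknown),
    }
```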

Ethical and Reputational Risks

AI systems can reinforce bias from training data, potentially leading to discriminatory outcomes and serious reputational damage. This bias can appear at any stage—from data collection to algorithm design to deployment—putting your organization at risk of both regulatory penalties and public backlash. The mere perception that a company does not use AI ethically or legally can cast its products and services in a negative light.

Governance and Compliance Challenges

The regulatory landscape for AI is evolving rapidly, with frameworks like the EU AI Act and the NIST AI Risk Management Framework gaining prominence. Your organization must navigate this changing environment proactively rather than reactively.

For African businesses, especially those operating digitally or serving international clients, aligning with global and local data regulations is not just advisable; it is essential. AI systems should be designed to comply with the laws and standards that apply to them, such as:

- EU AI Act – Widely regarded as the most comprehensive AI regulation to date. It applies to any business, including those outside Europe, that offers AI-powered services or tools to users within the EU.

- GDPR (General Data Protection Regulation) – Affects African businesses that collect or process the personal data of EU residents. It mandates transparency in how personal data is used and, for automated decisions with legal or similarly significant effects, requires companies to provide meaningful information about the logic involved.

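- SOC 2 – An AICPA attestation framework rather than a law. It assesses controls for security, availability, processing integrity, confidentiality, and privacy, and global clients often request a SOC 2 report from vendors, including those providing AI systems.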

- Kenya Data Protection Act (2019) – A local law modeled on GDPR, it regulates the collection, storage, and use of personal data in Kenya. Kenyan businesses using AI must ensure lawful data processing, obtain consent, and maintain transparency in algorithmic decision-making.

Adopting a risk-aware, compliance-first approach to AI ensures your business remains trustworthy, avoids regulatory penalties, and is prepared for growth in both local and international markets.

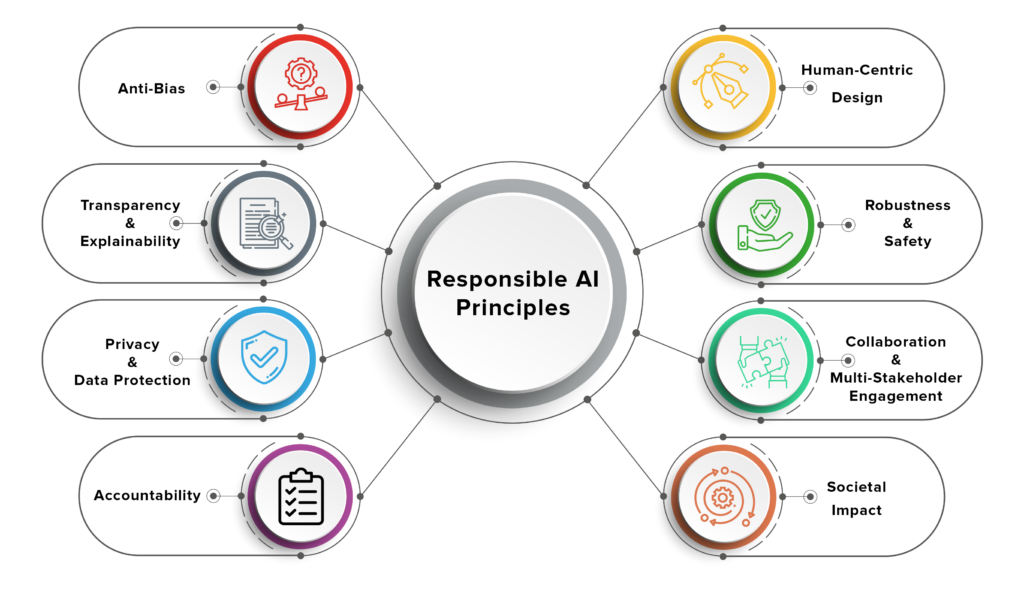

Building Your “Responsible AI by Design” Framework

To address these risks effectively, your organization needs a comprehensive approach that embeds responsibility, security, and governance into your AI strategy from day one. Here is a practical five-part framework:

1. Establish a Governance Structure with Multidisciplinary Oversight

Create a centralized AI governance board that brings together stakeholders from various disciplines. The enhanced risks of AI demand collaboration to ensure every angle is understood and addressed.

This governance structure should include representatives from:

- Legal and compliance

- Risk management

- IT security

- Business leadership

- Data science and AI engineering

A hub-and-spoke model works particularly well here: the central board (the hub) sets policy and ensures all the pieces of the puzzle fit together, while individual business units (the spokes) handle specialized implementation.

2. Implement Robust Access Controls and Security Measures

Protecting AI systems requires a number of security approaches:

- Least privilege access: Restrict user, API, and system access to what each role strictly needs

- Zero trust architecture: Continuously verify all interactions with AI models

- API monitoring: Detect unusual patterns that might indicate misuse

- Data integrity protection: Prevent modifications that could corrupt model outputs

Without these strict controls, adversaries can alter the output and trustworthiness of your AI models, potentially compromising your entire operation.
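Two of these controls can be sketched in a few lines of Python. The example below assumes hypothetical role names and permission strings for a deny-by-default (least privilege) access check, and uses a SHA-256 checksum to confirm that model weights on disk match an approved release before they are loaded. It is a minimal illustration, not a substitute for an identity provider or a signed-artifact pipeline.

```python
import hashlib
from pathlib import Path

# Hypothetical role-to-permission map: each role gets only what it needs.
ROLE_PERMISSIONS = {
    "analyst": {"model:query"},
    "ml_engineer": {"model:query", "model:deploy"},
    "auditor": {"logs:read"},
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are refused."""
    return action in ROLE_PERMISSIONS.get(role, set())


def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large model files are not read at once."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_file(path: Path, expected_sha256: str) -> bool:
    """Return False if the weights differ from the approved release."""
    return sha256_of(path) == expected_sha256
```

Checking every request against an explicit allow-list, rather than trusting a session once, is also a small step toward the zero trust principle above.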

3. Adopt Continuous Monitoring and Testing

AI systems require ongoing vigilance and testing to maintain security and performance:

- Model drift detection: Identify changes in model behavior that might indicate shifting input data, degrading performance, or unauthorized alterations

- Refusal tracking: Confirm that models consistently decline harmful or out-of-scope requests

- Fairness testing: Regularly audit for bias in model outputs

- Prompt and output logging: Maintain audit trails for sensitive AI-generated decisions

These monitoring strategies help catch potential issues before they become crises.
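As a rough illustration, the sketch below implements three of these checks in plain Python: the Population Stability Index (PSI), one common metric for comparing a live input distribution against a baseline; a demographic parity gap, one of several possible fairness measures; and a JSON audit record for prompt and output logging. The function names and record fields are hypothetical. A frequently cited rule of thumb treats a PSI above about 0.25 as a significant shift, but thresholds should be set for each use case.

```python
import json
import math
from datetime import datetime, timezone


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions (proportions)."""
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def demographic_parity_gap(outcomes: dict[str, list[int]]) -> float:
    """Largest difference in positive-outcome rate (1s) between groups."""
    rates = [sum(v) / len(v) for v in outcomes.values() if v]
    return max(rates) - min(rates)


def audit_record(user_id: str, prompt: str, output: str, model_version: str) -> str:
    """One JSON line per interaction, suitable for an append-only audit log."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "model_version": model_version,
        "prompt": prompt,
        "output": output,
    })
```

Because audit logs store prompts and outputs verbatim, they can themselves contain sensitive data, so they deserve the same access controls as the model itself.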

4. Take a Risk-Based, Incremental Approach to Deployment

Instead of rushing to implement AI across all business functions, take a measured approach. Deploy AI in non-critical systems first, then expand as your security controls mature.

This incremental strategy allows your organization to:

- Test security controls in lower-risk environments

- Build internal expertise gradually

- Develop appropriate governance policies based on real-world experience

- Create an AI incident response plan before critical deployments

This approach isn’t about slowing innovation; it’s about ensuring the sustainable, secure growth of your AI capabilities.

5. Invest in Transparency and Accountability

Building trust in AI systems requires transparency about how decisions are made. Keep communication open at every level, from the engineers who build models to the customers affected by their outputs.

Key elements include:

- Using model registries to track AI model lifecycles

- Maintaining an AI Bill of Materials (AIBOM) documenting AI dependencies

- Implementing explainable AI approaches where possible

- Keeping humans involved in critical decision processes

Human oversight remains absolutely essential. It is the cornerstone of maintaining transparency and building trust in your AI systems.
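A model registry entry and a human-in-the-loop gate can be sketched together. In the example below, `ModelRecord` is a hypothetical, minimal AIBOM-style record (real AIBOM formats capture far more), `ModelRegistry` is an in-memory stand-in for a registry service, and the 0.9 confidence threshold in `route_decision` is an arbitrary placeholder.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRecord:
    """Hypothetical registry / AIBOM entry for one model version."""
    name: str
    version: str
    base_model: str
    training_data_sources: list[str]
    dependencies: dict[str, str] = field(default_factory=dict)  # package -> version
    owner: str = ""
    approved_for_production: bool = False


class ModelRegistry:
    """In-memory stand-in for a registry that tracks each model version."""

    def __init__(self) -> None:
        self._records: dict[tuple[str, str], ModelRecord] = {}

    def register(self, record: ModelRecord) -> None:
        key = (record.name, record.version)
        if key in self._records:
            # Versions are immutable once registered, preserving the audit trail.
            raise ValueError(f"{record.name} {record.version} already registered")
        self._records[key] = record

    def get(self, name: str, version: str) -> ModelRecord:
        return self._records[(name, version)]


def route_decision(confidence: float, high_impact: bool, threshold: float = 0.9) -> str:
    """Send high-impact or low-confidence decisions to a human reviewer."""
    if high_impact or confidence < threshold:
        return "human_review"
    return "automated"
```

Here, `route_decision(0.95, high_impact=True)` returns `"human_review"`: for high-impact decisions, a person stays in the loop regardless of the model's confidence.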

Transforming Risk into Opportunity

The organizations that will thrive in the AI era aren’t those avoiding risk entirely but those implementing thoughtful frameworks to manage risk effectively. By adopting a “responsible AI by design” approach, you transform potential challenges into competitive advantages:

- Enhanced customer trust: Demonstrating responsible AI use builds confidence in your products and services

- Regulatory readiness: Proactive governance prepares you for evolving compliance requirements

- Innovation with guardrails: Security-conscious implementation enables faster, safer deployment

- Reduced likelihood of costly incidents: Comprehensive risk management lowers the chance of data breaches, regulatory fines, and reputational damage

Building Your AI Future Today

The journey toward secure, responsible AI implementation isn’t a one-time project but an ongoing commitment to excellence. Human oversight must remain front and center.

By developing robust governance structures, implementing comprehensive security controls, and fostering a culture of responsible innovation, your organization can harness AI’s transformative potential while mitigating its risks.

The businesses that will lead tomorrow are those thinking critically about AI risks today. Are you building the framework that will position your organization for success in the AI-powered future?